
Now, if you've written much software, you may be wondering what the big deal is. After all, every operating system and pretty much every programming language has built-in functions called random() or rand() that generate random numbers for you using deep, complex mathematics that, thankfully, we programmers don't need to understand in order to use the functions. Unfortunately, despite their names, these functions do not produce random numbers in the sense that we have used the term here. In fact, the numbers computed by these so-called pseudo-random number generators (PRNGs) are completely, 100% predictable to anyone who knows the algorithm and its starting state (the "seed"). They do have a sometimes useful property called statistical uniformity or statistical randomness, but they are in no way truly random. Think of the successive digits of the number pi, which are statistically random, but easily predictable by anyone who can divide the circumference of a circle by its diameter.
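To see that predictability for yourself, here is a minimal Python sketch. It assumes nothing beyond the standard library's `random` module; the helper name `prng_stream` and the seed value are mine, chosen only for illustration.

```python
import random

def prng_stream(seed: int, count: int = 5) -> list[int]:
    """Return the first `count` 32-bit outputs of a PRNG started from `seed`."""
    generator = random.Random(seed)  # private generator, independent of global state
    return [generator.getrandbits(32) for _ in range(count)]

# Two "independent" runs that happen to start from the same seed
# (an arbitrary, illustrative value) produce identical sequences.
first_run = prng_stream(2013)
second_run = prng_stream(2013)
print(first_run == second_run)
```

Anyone who learns or guesses the seed can replay the entire stream, which is exactly the property a simulation wants and a cryptosystem cannot tolerate.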

The extensive use of such PRNGs in commercial and government cryptography products has caused immeasurable harm, the extent of which is only just beginning to emerge. A few weeks ago, it was revealed that the default and recommended PRNG algorithm, whose formidable name is Dual Elliptic Curve Deterministic Random Bit Generator, used by many commercial companies in the US and abroad, contains a backdoor vulnerability that was deliberately inserted by its inventor. That inventor, the US National Security Agency or NSA, now has the capability to predict pseudo-random sequences for any software that uses the tainted PRNG. If this doesn't seem all that important to you, consider this passage from the New York Times article:

"The agency has circumvented or cracked much of the encryption, or digital scrambling, that guards global commerce and banking systems, protects sensitive data like trade secrets and medical records, and automatically secures the e-mails, Web searches, Internet chats and phone calls of Americans and others around the world..."

Incidentally, the tainted algorithm was adopted so widely, despite its known origins in the NSA, because another US government agency, the National Institute of Standards and Technology, NIST, blessed it as a standard and recommended that it be used wherever possible. Government contractors and vendors supplying cryptography products to the federal government were then required to be certified, which certification included the requirement to use a certain PRNG algorithm.

PRNGs aren't all bad. There are many applications, e.g. Monte Carlo simulation and automated testing, where a repeatable stream of statistically random numbers is just what the doctor ordered. But there are other uses, cryptography being one, for which only the best, genuinely random, numbers will do. Another area where universally unpredictable, truly random numbers are highly desirable is for UUIDs - Universally Unique IDentifiers - used, for example, in massively scalable digital storage clusters that have global namespaces. Here, each stored object, and there may be billions or trillions of them, is assigned a UUID that is different from every other object's UUID. Not only that, it is different from every other UUID ever used or that ever will be used. Thus the term "universal." Such UUIDs become the true names for these objects, in the sense discussed in Satnam and other Dangers.

There are several different ways to generate UUIDs that are guaranteed to be unique. Most of these involve some sort of centralized naming authority. For example, the namespace for Internet URLs is ultimately managed by the Internet Assigned Numbers Authority, IANA. But deterministically generating UUIDs in this way is slow and requires a great deal of cooperation. And did I mention there's still some pseudo-government entity controlling the namespace?

An alternative approach, using large, truly random numbers for UUIDs, can't guarantee uniqueness, but it can make it very, very unlikely that two different objects will receive the same UUID (a "collision"). And if the calculable probability of a collision of truly random UUIDs is smaller than the probability of an undetected hardware or software failure in the deterministic algorithms (see Provably Probable is Better than Probably Provable), then the truly random approach has an important practical advantage over the "deterministic" ones. Namely, each process in a distributed network can, in theory, generate UUIDs independently of every other process; no cooperation with other processes or with a central naming authority is required.
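Just how unlikely is "very, very unlikely"? Here is a rough sketch using the standard birthday-bound approximation, p ≈ 1 - exp(-n(n-1)/2N), for n identifiers drawn uniformly from N possibilities. The function name and the trillion-object figure are illustrative assumptions of mine, and the estimate only holds if the numbers really are uniformly random.

```python
import math

def collision_probability(n_ids: int, bits: int = 128) -> float:
    """Approximate chance that at least two of n_ids random values collide."""
    space = 2 ** bits                      # number of possible identifiers
    exponent = -n_ids * (n_ids - 1) / (2 * space)
    # expm1 keeps precision when the probability is extremely small
    return -math.expm1(exponent)

# A trillion stored objects, each named by a 128-bit random number.
p_cluster = collision_probability(10**12, bits=128)
print(f"{p_cluster:.3e}")
```

For a trillion 128-bit identifiers the estimate comes out on the order of 10^-15, which is the kind of number you can then compare against the failure rates of the hardware and software in a deterministic scheme.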

Which brings us, finally, to the main topic of this chapter. How can we program a modern digital computer to do something that is universally unpredictable — truly random? How can we teach a robot to sneeze?

Consider the following programming problem. We have four server-class computers, no keyboards or mice attached, all plugged into the same power strip. The servers are all identical - same hardware, same memory, same peripherals. All are networked together through a single network switch. The power strip is switched on so that all four computers start at the same moment and they all boot the exact same operating system, say Linux, and then synchronize their clocks. They then auto-execute an application program whose sole task is to generate a 128-bit truly random number and share it with the other servers. Each server stores the four numbers generated in this round, then compares its number against all previously recorded ones. If its generated number is different from every other number ever generated by any of the servers, it reboots itself and the whole process starts over again. If there is ever a duplicate number generated, an alarm goes off, lights flash, sirens wail, and the V.P. of Engineering gets a frantic phone call in the middle of an otherwise relaxing and much needed vacation in Taos, New Mexico.

There are several different ways to solve this problem, and some are better than others. A naive (meaning terrible) approach would be to simply look around the system to find "random" or unpredictable values, then hash them together to form a candidate number. Simply scrambling the value of the real-time clock would generate a number that is statistically unique across reboots, but every server would likely generate the same value, since their clocks are synchronized. You could mix in other more or less random values, like the serial numbers of peripherals, if available, or the MAC addresses of the network interface cards. But these numbers are static across reboots, so the same machine would find the same values each time and from them generate the same candidate number. Also, these numbers are unique only if you trust the central naming authorities, usually manufacturers' associations, that assigned them. Specifically in the case of MAC addresses for NICs, manufacturers are assigned blocks of numbers they then use in serial fashion for their network cards. Since our servers come from the same manufacturer and perhaps the same batch, there is a good chance the MACs are very close to one another. Contrary to common belief, MAC addresses can also be reused over time.
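The two failure modes of the naive approach are easy to demonstrate. The sketch below is purely illustrative: the clock reading and MAC address are made-up values, and `naive_candidate` is a hypothetical helper, not anything a real system provides.

```python
import hashlib

def naive_candidate(values: list[str]) -> int:
    """Hash 'unpredictable-looking' system values into a 128-bit candidate."""
    material = "|".join(values).encode()
    return int.from_bytes(hashlib.sha256(material).digest()[:16], "big")

boot_clock = "1380000000"        # hypothetical synchronized clock reading
mac_address = "00:1b:21:aa:00:01"  # made-up MAC address

# Clock only: two servers with synchronized clocks compute the same number.
server_a = naive_candidate([boot_clock])
server_b = naive_candidate([boot_clock])

# Static identifiers only: one server repeats itself after every reboot.
first_boot = naive_candidate([mac_address])
second_boot = naive_candidate([mac_address])

print(server_a == server_b, first_boot == second_boot)
```

Hashing makes the output look scrambled, but a hash is deterministic: identical inputs always give identical outputs, so it cannot add unpredictability that the inputs lack.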

A better all-around approach is to use measured timings for hardware-related events, which relies in one way or another on detecting and measuring small discrepancies (a.k.a. failures) in the hardware. The Linux operating system (and most commercial Unix variants) includes a random number generator like this in its kernel. This mechanism measures tiny variations in interrupt timings in various hardware drivers, including keyboard, mouse, hard disk, and network drivers, to accumulate an "entropy pool" of randomness. When an application wishes to use a random number, it simply reads from a special device called /dev/random and asks for some number of bytes of randomness. If there is sufficient entropy in the pool at the time of the request, the kernel returns the requested number of bytes of randomness and reduces the pool by the same number of bytes. If there is not sufficient entropy yet, the read from /dev/random will block until enough randomness accumulates.
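From the application's side, the read looks something like the following sketch. The function names are hypothetical, and the fallback to `os.urandom` on systems without the device file is my own assumption for portability. Newer kernels may also behave differently from the blocking design described above, so take the comments as describing the classic behavior.

```python
import os

def read_kernel_random(nbytes: int, device: str = "/dev/random") -> bytes:
    """Read nbytes from the kernel's random device, waiting if it blocks."""
    if not os.path.exists(device):
        return os.urandom(nbytes)  # fallback for systems without the device
    collected = bytearray()
    # buffering=0 avoids pulling more bytes from the pool than we asked for
    with open(device, "rb", buffering=0) as dev:
        while len(collected) < nbytes:
            chunk = dev.read(nbytes - len(collected))  # may return fewer bytes
            if not chunk:
                raise OSError(f"unexpected end of data from {device}")
            collected += chunk
    return bytes(collected)

def random_128_bit_number(device: str = "/dev/random") -> int:
    """Build a 128-bit candidate number from 16 bytes of kernel randomness."""
    return int.from_bytes(read_kernel_random(16, device), "big")
```

A caller on one of our four servers would simply invoke `random_128_bit_number()` and, if the pool is shallow, wait.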

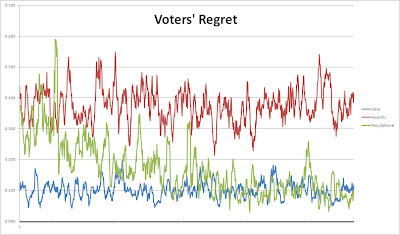

The word entropy here is an allusion to Claude Shannon's idea of entropy in communication theory. The actual calculation of this supposed measure of randomness in the Linux random number generator is somewhat mysterious and ill-understood. Even though the source code for it is publicly available, there seems to be no good explanation for why it might work, in theory. Nevertheless, it is clear that the best sources for randomness are the human interfaces: the keyboard and mouse. Ironically, the best way for a robot to do something unexpected is to ask a human! For our sample network of servers, which is based on a real-world cluster of storage servers I once helped design, there are no human interfaces from which to draw randomness. Analysis has shown that other sources, notably hard disk drivers, are very poor sources of randomness, generating something like one bit of entropy per minute of operation. It seems the Linux random number pool is exceedingly shallow, which is once again ironic given that we know randomness is not a kiddie pool in the middle of our otherwise deterministic universe, but rather a vast ocean of unpredictability surrounding us all.

There is a way to draw randomness directly from that ocean by using specialized devices called Quantum Random Number Generators, QRNGs. As the name suggests, these devices tap into quantum-level phenomena, including the mother of all weirdness, quantum entanglement, to pull randomness from between the grid lines of the rational universe. Unfortunately, these devices are generally not a part of standard, off-the-shelf, computers like the ones we have conjectured for our example network. Given the importance of this kind of functionality for effective cryptography, that's a little odd, don't you think? If I were a conspiracy theorist, I would suspect the NSA has had a part in this omission.

But the sad fact is, to solve our proposed puzzle using only commodity servers, we cannot rely on fancy QRNGs, at least not today. The question now boils down to this: How can we tap into the realm of quantum-level events using ordinary hardware?


Luckily, there is a second clock within most every modern computer, one that uses an independent crystal oscillator as its frequency source: the real-time clock or RTC. It is quite important for different computers within a network to agree on what time it is, or at least on how long a second is. To do this, most computers use a much lower-frequency, and less noisy, crystal oscillator running at tens of thousands of cycles per second (32,768 Hz is a common choice) to measure the passage of time. Of course, this second crystal is subject to the same kind of noise as the CPU oscillator, though less so because of its lower frequency. But that's good for our purposes! We can simply count how many CPU clock cycles occur within each RTC cycle, subtract successive samples and contribute the least-significant few digits to the entropy pool. Conceptually, this sampling can be done by executing a simple instruction loop a few million times and asking the RTC how long it took to complete. Given the realities of things like hardware interrupts and process scheduling, the details are of course more complicated, but the solution remains practical and it works for almost any commercially-available digital computer.
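The conceptual version of that sampling loop can be sketched in a few lines of Python. This is an illustration of the idea only, under several loud assumptions: `time.perf_counter_ns()` stands in for the second clock (Python cannot read the RTC or count CPU cycles directly), the loop sizes are arbitrary, the final SHA-256 step is my own addition to condense the samples, and nothing here has been vetted as a real entropy source.

```python
import hashlib
import time

def jitter_bytes(count: int = 256, spins: int = 2000) -> bytes:
    """Time a fixed busy loop repeatedly; keep the low byte of each change."""
    samples = []
    previous = None
    for _ in range(count + 1):        # one extra pass to seed `previous`
        start = time.perf_counter_ns()
        total = 0
        for i in range(spins):        # the "simple instruction loop"
            total += i
        elapsed = time.perf_counter_ns() - start
        if previous is not None:
            # subtract successive samples, keep only the least-significant bits
            samples.append((elapsed - previous) & 0xFF)
        previous = elapsed
    return bytes(samples)

def jitter_candidate() -> int:
    """Condense the raw jitter samples into one 128-bit candidate number."""
    digest = hashlib.sha256(jitter_bytes()).digest()
    return int.from_bytes(digest[:16], "big")

candidate = jitter_candidate()
print(f"{candidate:032x}")
```

The high-order bits of each timing are dominated by the predictable cost of the loop, which is why only the low-order bits of the difference are kept. How much genuine unpredictability they carry depends on the hardware and the clock's resolution.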

Computers do exactly what we tell them to do. When they don't, we typically think of it as a failure. But unexpected does not always mean faulty. Not only is it useful for software developers to plan to fail when designing complex systems, it is sometimes necessary to actually elicit and embrace failures, to swim briefly in the ocean of randomness. Randomness, one could argue, is at the very core of humanity and human creativity, and it isn't possible to program a computer to do something completely unexpected. To teach a robot to sneeze, we must allow her to surprise us.